In this project, we will use machine learning algorithms to perform image classification. Image classification is the task of predicting the class, or label, of an image from a set of known classes. This is a type of supervised learning, which refers to algorithms that learn a function from labelled datasets.

We will be writing algorithms to do image classification on the MNIST dataset. MNIST consists of tiny 28×28 pixel images of handwritten digits from 0 to 9. A few example images from each digit class are shown below.

MNIST has 60,000 images to train a classification algorithm, each labelled with the correct digit. In addition, there are 10,000 images which are used for testing the accuracy of the algorithm. We will implement machine learning algorithms to classify images.

This project is implemented using the Python programming language. We will be using the Python libraries NumPy and SciKit Learn. Before you start, make sure you have watched the Module 4 lectures.


Getting the Code

Parts 1 and 2 of this project are completed using Jupyter Notebooks which we will run in Google Colab. To accept the assignment, open the template notebooks using the links provided by your instructor and select File > Save a copy in Drive to save a copy in your Google Drive. There are two notebooks, one for each of Parts 1 and 2.

To get the template code for Part 3 of this project, see your course page for the GitHub Classroom assignment link. Part 3 will run on the robot. Modify the LICENSE.txt file in your GitHub repository to include team member names and the year. Make sure the change is committed to your repository.

- P4 License: In the file LICENSE.txt, replace <COPYRIGHT HOLDER> with your name. Replace <YEAR> with the current year.

Project Description

In this project, you will use various machine learning algorithms for image classification on the MNIST dataset. Then, you will use the best algorithm to perform image classification on the robot in a museum tour guide task.

- Part 1: Nearest Neighbors in Python

- Part 2: Training Machine Learning algorithms with SciKit Learn

- Part 3: Robot Museum Tour Guide

Parts 1 and 2 are done individually, using Google Colab. We have provided a Google Colab notebook which introduces the concepts of Jupyter Notebooks and using NumPy, available as a practice activity.

Part 1: Nearest Neighbors

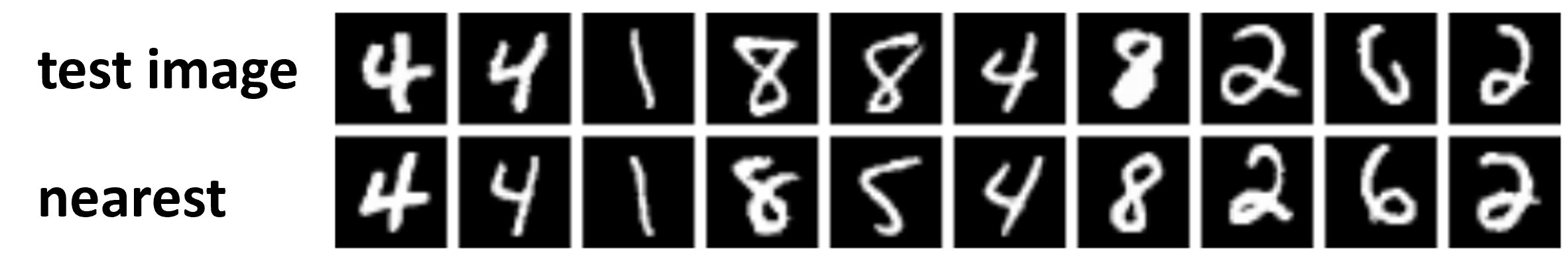

In Part 1 of this project, you will implement a Nearest Neighbor classifier. The nearest neighbor algorithm takes a test image and finds the closest train image, which we call the image's nearest neighbor. Then, it assigns the test image the same label as its nearest neighbor. Here is an example of some MNIST images and their nearest neighbors:

Open the notebook Nearest Neighbors.ipynb using the Google Drive link provided, and save a copy in your Drive. The notebook is made up of cells, which can be text cells (in Markdown) or code cells (in Python). You can run a cell by pressing the play button in the top left corner of the cell.

You need to run a cell before you use anything it defines in another cell. For example, you should always run the imports cell when you start the notebook. Similarly, you must run a cell which defines a variable or function before running a cell that uses it. Start by running the import cell at the top of the notebook to import some dependencies we'll need later on.

The text in the notebook contains descriptions of how the data is stored and how distance should be calculated. Places where you need to write code are noted in the comments, with the label TODO. Use the provided test cells to check that your functions are working correctly.
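As a rough illustration of the nearest neighbor idea described above, here is a minimal NumPy sketch on toy 2×2 "images". The function name `nearest_neighbor_predict` and the use of Euclidean distance are assumptions made for this example; the notebook specifies how the data is stored and which distance you should actually implement.

```python
import numpy as np

def nearest_neighbor_predict(train_images, train_labels, test_image):
    """Return the label of the training image closest to test_image."""
    # Flatten each image into a vector so the distance covers every pixel.
    train_flat = train_images.reshape(len(train_images), -1).astype(float)
    test_flat = test_image.reshape(-1).astype(float)
    # Euclidean distance from the test image to every training image
    # (an assumption; the notebook defines the distance to use).
    distances = np.linalg.norm(train_flat - test_flat, axis=1)
    # The nearest neighbor is the training image with the smallest distance.
    return train_labels[np.argmin(distances)]

# Toy data: three 2x2 "images" with made-up labels.
train_images = np.array([
    [[0, 0], [0, 0]],
    [[9, 9], [9, 9]],
    [[0, 9], [0, 9]],
])
train_labels = np.array([0, 1, 7])
test_image = np.array([[1, 8], [0, 9]])

predicted = nearest_neighbor_predict(train_images, train_labels, test_image)
print(predicted)  # 7: the third training image is by far the closest
```

The same structure carries over to 28×28 MNIST images, since flattening makes the image shape irrelevant to the distance computation.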

Part 2: Training Machine Learning Algorithms

In this part, we will use a Python library called SciKit Learn. This library has implementations for multiple machine learning algorithms. We will focus on three of them:

- K-Nearest Neighbors

- Linear Classifier

- Neural Network

We will use the SciKit Learn implementations for each of these. Your job will be to train the best versions of the K-Nearest Neighbors and Neural Network algorithms using Cross Validation. The Linear Classifier will be used as a baseline (your Neural Network must outperform it!).
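To make the idea of choosing a model with cross validation concrete, here is a small sketch that compares a few values of k for K-Nearest Neighbors. It is only an illustration under assumptions: it uses the 8×8 digits dataset bundled with scikit-learn as a stand-in for MNIST, and the candidate values and the 5-fold split are arbitrary choices, not the ones the notebook asks for.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Small digits dataset shipped with scikit-learn (a stand-in for MNIST).
X, y = load_digits(return_X_y=True)

candidate_ks = [1, 3, 5]  # arbitrary candidates for illustration
mean_scores = {}
for k in candidate_ks:
    model = KNeighborsClassifier(n_neighbors=k)
    # 5-fold cross validation: train on four folds, score on the held-out fold.
    scores = cross_val_score(model, X, y, cv=5)
    mean_scores[k] = scores.mean()

# Keep the k with the highest mean validation accuracy.
best_k = max(mean_scores, key=mean_scores.get)
print(f"best k = {best_k}, mean accuracy = {mean_scores[best_k]:.3f}")
```

Because every candidate is scored only on held-out folds, the test set stays untouched until the final evaluation.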

Open the notebook Machine Learning with SciKit Learn.ipynb using the Google Drive link provided, and save a copy in your Drive.

The text in the notebook guides you through each task. Places where you need to write code are noted in the comments, with the label TODO.

The end of this notebook contains a cell which allows you to save your favorite classification algorithm to a file. You should run it, then download the file for Part 3. With your partner, select the best algorithm from both of your notebooks to use.
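For intuition about what saving and reloading a trained model involves, here is a minimal sketch using Python's pickle module. This is an assumption for illustration only: the notebook's save cell may use a different format or library, and the file name `model.pkl` is hypothetical. Use the notebook's own cell for the file you take to Part 3.

```python
import os
import pickle
import tempfile

from sklearn.datasets import load_digits
from sklearn.neighbors import KNeighborsClassifier

# Train a small model on scikit-learn's bundled digits (a stand-in for MNIST).
X, y = load_digits(return_X_y=True)
model = KNeighborsClassifier(n_neighbors=3).fit(X, y)

# Save the trained model to a file (hypothetical file name).
model_path = os.path.join(tempfile.mkdtemp(), "model.pkl")
with open(model_path, "wb") as f:
    pickle.dump(model, f)

# Load it back, as you would on another machine.
with open(model_path, "rb") as f:
    loaded = pickle.load(f)

# The reloaded model should make the same predictions as the original.
same = bool((loaded.predict(X[:10]) == model.predict(X[:10])).all())
print(same)
```

Pickled scikit-learn models are generally only safe to load with the same library version that saved them, so it is worth checking that the versions in Colab and on the robot match.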

Part 3: Robot Museum Tour Guide

Once you have trained a machine learning algorithm to recognize digits, you will use it on the robot to detect digits using the robot's camera. The robot's task will be to detect images in a museum (represented by a maze), and use the result to select which museum location to go to next. The waypoints corresponding to each digit will be provided by the instructors. The robot will use planning code from Project 3 to drive between the waypoints. Here is a visualization of the final demo:

The instructors will provide a list of digits and their corresponding waypoints.

Not all digits will be represented.

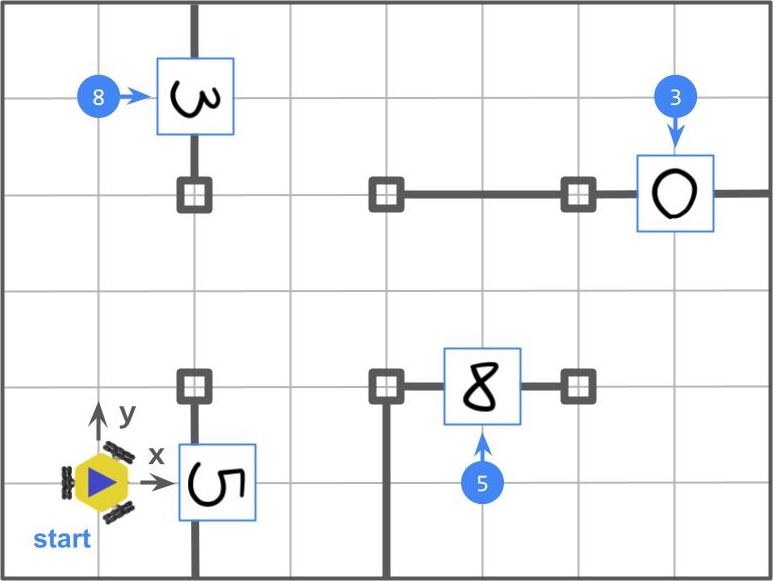

Here is an example waypoint course configuration, where the digit of interest is followed by a waypoint, in format [x, y, theta] (positions are in meters and angles are in radians):

0: [0, 0, 0]

3: [3, 2, -PI / 2]

5: [2, 0, PI / 2]

8: [0, 2, 0]

This configuration corresponds to the following map:

The waypoints are labelled in blue circles, and correspond to the waypoints given in the course configuration. At each waypoint, the wall the robot faces in the given configuration will have a handwritten digit (as shown in the image). The digit tells the robot which waypoint to visit next. All waypoints in the configuration will have a handwritten digit at the corresponding map location.

The robot will always start at [0, 0, 0]. A handwritten digit on the wall of the maze will tell the robot which waypoint to visit first. When the robot detects the digit "0", it should return to the zero position, and stop execution (i.e. it should not travel back to the waypoint it detects at the origin). In the above example, the robot's trajectory should be: "0" → "5" → "8" → "3" → "0".

You will receive full points if the robot follows the path correctly as described by the configuration. You should print out the digit detected at each waypoint. You will not lose points for up to 1 incorrect detection. You must deal with the case where the robot detects a digit that does not correspond to any waypoint in the configuration.
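The tour logic described above can be sketched as a simple loop over detections. This is only one possible structure, not the template's: `run_tour`, `detect_digit`, `drive_to`, and `max_retries` are hypothetical names, and the detections here are simulated rather than read from the camera. The waypoints are the example configuration from this page.

```python
import math

# Example course configuration from this page: digit -> [x, y, theta].
WAYPOINTS = {
    0: [0, 0, 0],
    3: [3, 2, -math.pi / 2],
    5: [2, 0, math.pi / 2],
    8: [0, 2, 0],
}

def run_tour(waypoints, detect_digit, drive_to, max_retries=3):
    """Follow detected digits between waypoints until digit 0 is seen.

    detect_digit() and drive_to(x, y, theta) are hypothetical callbacks.
    Returns the list of digits visited, starting at the origin.
    """
    visited = [0]  # The robot always starts at waypoint 0.
    retries = 0
    while True:
        digit = detect_digit()
        print("Detected:", digit)
        if digit not in waypoints:
            # Missing or invalid detection: look again, but give up eventually.
            retries += 1
            if retries > max_retries:
                break
            continue
        retries = 0
        drive_to(*waypoints[digit])
        visited.append(digit)
        if digit == 0:
            break  # Back at the origin: stop without reading the digit there.
    return visited

# Simulated run: 7 is not in the configuration, so it is skipped.
detections = iter([5, 7, 8, 3, 0])
drive_log = []
path = run_tour(WAYPOINTS, lambda: next(detections),
                lambda x, y, theta: drive_log.append((x, y, theta)))
print(path)  # [0, 5, 8, 3, 0]
```

Keeping the detection and driving steps behind callbacks makes it possible to test the tour logic on a laptop before running it on the robot.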

Robot Setup

You will use your code from Project 3 to generate plans between waypoints, and your model output from the Colab notebooks in Part 2 to detect digits. You can use the planning binary and classification model from either partner. Follow these steps to set up your robot:

- Upgrade Python's installer, Pip:

    pip install --upgrade pip

- Clone your team's repo onto the robot.

- Install the dependencies for the project:

    cd robot-tour-guide-f23-[TEAM]

    pip install -r requirements.txt

- Get the path planning executable from Project 3. This file gets placed in the build directory of the Project 3 repository and is called robot_plan_path. Place this file in the bin folder in your Project 4 repository.

- Get the model from your Colab notebook. Place it in your repository. Update the variable PATH_TO_MODEL in the file robot_tour_guide.py to contain the path to the file.

Now you are ready to start coding!

Robot Code

Before running your code, you must create a map and be properly localized within it. Follow the localization instructions.

A number of helper functions have been created for you. You must follow the steps in Robot Setup before writing or running any code.

Robot Functions: The Robot helper allows you to drive the robot, plan to and execute a path, and turn to a goal. Use this functionality as follows:

robot = MBot() # This initializes communication with your robot.

plan_to_pose(x, y, robot) # Use this to drive to a goal location.

turn_to_theta(theta, robot) # Use this to turn to a goal angle.

Camera Functions: The camera helper looks for Post-It notes in the camera image, and returns a cropped and scaled image which is the correct size to pass through your classification algorithm. To use it, do:

ch = CameraHandler() # This variable is defined for you in the template.

frame = ch.get_processed_image() # Get the image of a digit from the camera.

If a digit is not detected in the frame, it will return None. You should handle this case. To save the image to the folder output, pass save=True to the above function. This will also save images at all stages of preprocessing. This might be useful for debugging.
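One way to handle the None case is to retry a few times before giving up. The sketch below assumes only that get_processed_image() returns None when no digit is found, as described above. `FakeCameraHandler` is a stand-in written for this example so it runs without a robot, and `wait_for_digit`, `max_attempts`, and `delay` are hypothetical names.

```python
import time

class FakeCameraHandler:
    """Stand-in for the template's CameraHandler, for illustration only."""

    def __init__(self, frames):
        self._frames = list(frames)

    def get_processed_image(self, save=False):
        # Return the next queued frame, or None once no frames remain.
        return self._frames.pop(0) if self._frames else None

def wait_for_digit(ch, max_attempts=5, delay=0.0):
    """Poll the camera until a digit image is returned or attempts run out."""
    for _ in range(max_attempts):
        frame = ch.get_processed_image()
        # Compare against None explicitly: a real frame is an array, and
        # testing an array's truth value directly raises an error.
        if frame is not None:
            return frame
        time.sleep(delay)  # Give the camera a moment before looking again.
    return None

fake_frame = [0.0] * (28 * 28)  # placeholder for a processed digit image
ch = FakeCameraHandler([None, None, fake_frame])
frame = wait_for_digit(ch)
print(frame is not None)  # True: a frame arrived on the third attempt
```

If wait_for_digit still returns None, the calling code needs its own fallback, such as turning slightly or stopping the tour.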

To perform prediction on the frame using your model, do:

y_pred = model.predict([frame])[0]

Waypoints and Labels:

A helper script has been provided to store and load waypoints paired with labels. See the instructions in README.md or run python3 waypoint_writer.py and follow the prompts. You will need to store waypoints and labels any time you move to a new course or update your map.